INTRODUCTION

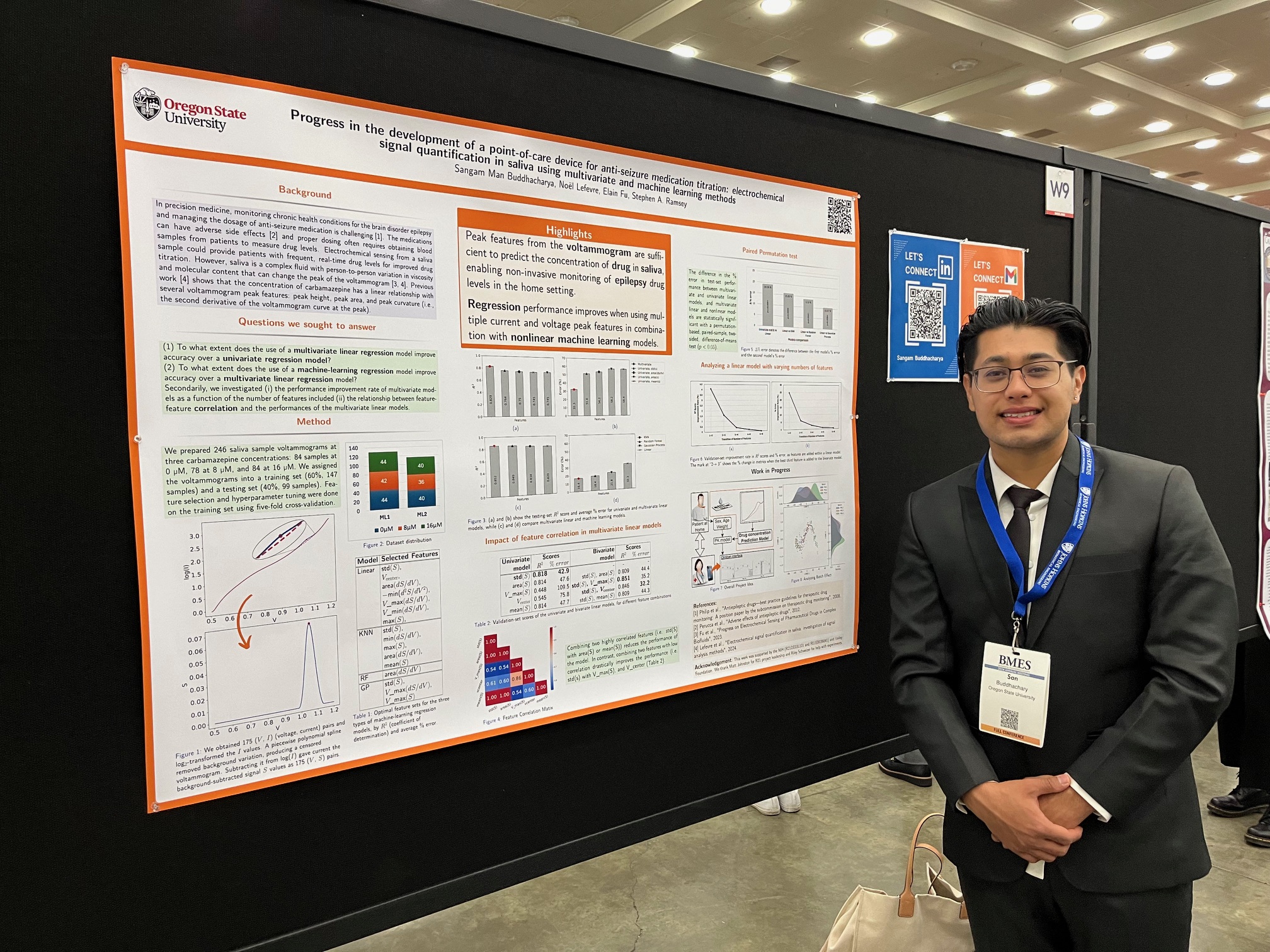

I'm Sangam Man Buddhacharya

I'm a unique mix of introverted mystery and talkative charm—if we chat a lot, welcome to my inner circle! As a machine learning maestro, I craft pipelines that tackle real-world challenges. My toolkit spans machine learning, deep learning, statistical models, and visualization, and I excel at deploying them with MLOps best practices.

With experience in data science, computer vision, and NLP projects, I stay ahead by updating my AI knowledge weekly via Twitter, LinkedIn, and research papers. I'm laser-focused on making my mark as a Machine Learning Engineer, AI Engineer, and Data Scientist.

Let's create something amazing together!

Expected graduation date: June 2025

4+

AI/ML/Data Science: YEARS OF EXPERIENCE

3+

INDUSTRY: YEARS OF EXPERIENCE

7+

PROJECTS IN PRODUCTION

Biography

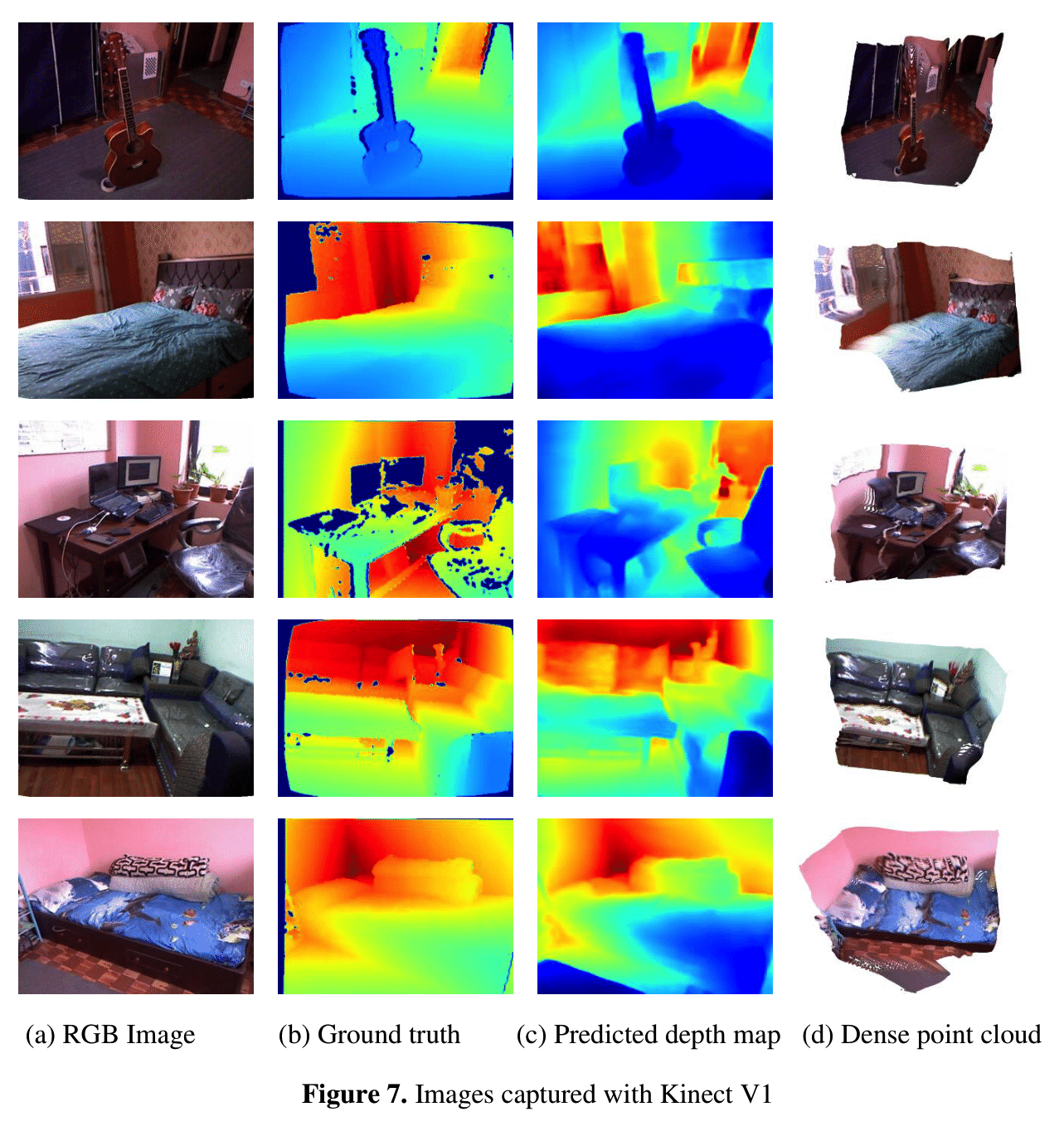

I began my journey in data during my undergraduate years, which introduced me to the exciting field of machine learning. Seeing computers learn from experience and examples ignited my passion for data and machine intelligence. My major project involved estimating image depth using a CNN encoder-decoder network, where I implemented a multigrid attention network, reducing error by 3%, leading to a published paper.

My professional path began as an Associate Data Engineer at Deerwalk (Cedargate), where I honed my database, SQL, and Excel skills. From there, I moved up to Data Scientist at Selcouth Technology, a startup, leading a team of five to build a deep learning pipeline for clothing ad recommendations by detecting similar items from video reels. We published a research paper during this time, marking another milestone.

I then joined Artlabs as a remote Data Scientist, working with over five global clients and tackling diverse challenges. Simultaneously, I freelanced, helping companies like Marbazzar, Geligulu, and ProTracker address their unique business needs with machine learning solutions. Currently, I am pursuing a master’s degree in Computer Science at OSU and contributing to research as a graduate assistant.

Education

Dual Master in Computer Science and Artificial Intelligence (GPA: 3.96/4)

Research Assistant with Prof. Dr. Stephen Ramsey

Oregon State University

Expected Graduation Date: June 2025

Bachelor in Electronics and Communication Engineering (GPA: 3.97/4)

Magna Cum Laude Distinction

Pulchowk Campus, Tribhuvan University

Dec 2021

Experience - Timeline

A short summary of my work experience.

-

2023 - Present

Oregon State University, Corvallis, OR

I currently work as a Graduate Research Assistant at the Ramsey Laboratory, in the field of machine learning and biology.

-

2023

Marbazzar, San Francisco, CA

Before coming to the United States, I worked remotely as a freelance Machine Learning Engineer for two months. As part of the team, I developed a pipeline to generate a 3D avatar of a person from a single image.

-

2023

Geligulu (Freelancer), Remote Work

I worked as a Machine Learning Freelancer and developed an Automatic Dimension Measurement System. I designed the computer vision pipeline, trained deep learning models, and created a fully functioning Django web application. Additionally, I deployed the project on an AWS server using NGINX, managed the MySQL database, and automated the QR scanning process.

-

2023

Upwork (Freelancer), Remote Work

I worked as a Machine Learning freelancer on Upwork, developing various machine learning pipelines for industry projects like an automatic analog gauge meter reader, 3D room reconstruction, vehicle speed calculation, litter detection, and more. This opportunity allowed me to solve numerous real-world challenges while working on diverse projects for clients worldwide.

-

2022 - 2023

Artlabs, New York, NY

As a Data Scientist (Remote), I designed LLM pipelines and web APIs for an AI-girlfriend chatbot using LangChain, ChatGPT, and FastAPI. I used NLP to build a web app that automatically summarizes art news, giving art enthusiasts tailored updates. For the TrakTrain client, I improved the music recommendation system, achieving a 63% increase in top-10 similarity accuracy, from 40%. Additionally, I developed an automatic UV wrapping tool for the RendezVerse client, reducing the manual UV texture mapping time for 3D designers by 20%.

-

2021 - 2022

Selcouth Technologies, Chitwan, Nepal

I worked as a Data Scientist and built a computer vision pipeline to recommend online clothing ads on Flipkart based on clothes from movies and short videos. I increased the approval rate from the ShareChat (MoJ) client from 51% to 83% within six months. Additionally, I designed a frame selection algorithm that decreased the false acceptance rate (FAR) by 4% and increased image retrieval accuracy by 7%. I also applied a human tracking system to identify the main characters, so that unique clothing items could be recommended.

-

2021

Cedargate (Deerwalk), Lalitpur, Nepal

As an Associate Data Engineer, I developed algorithms to transform data into actionable information. I interpreted and analyzed data using statistical techniques such as the Pearson correlation coefficient, K-Means Clustering, and PCA. Additionally, I standardized diverse data sources by mapping fields to a common schema and establishing a standardized vocabulary.

-

2021

NAAMII, Lalitpur, Nepal

I worked as a Teaching Assistant at the 3rd Nepal Winter School in AI, where I guided participants in writing deep learning code and setting up frameworks such as TensorFlow, Keras, and OpenCV. I clarified fundamental concepts, including backpropagation, convolutional operations, pooling layers, and activation functions, for the novice group. Additionally, I introduced generative adversarial networks (GANs) to the advanced beginner group.

-

2020

LOCUS, Lalitpur, Nepal

As Hardware Coordinator, I organized hardware events, including RoboWarz, Dronacharya, and RoboPop, and led the national-level Locus 2020 competition with over 120 teams. I secured $8695.65 in sponsorships. I conducted a 15-day workshop on C, Python, and Arduino for 70+ freshmen, encouraging project development. Over 35 freshmen participated in LOCUS 2020 with their projects. I mentored three projects: Hand Gesture Recognition, Face Recognition for Door Security, and Medical Drone Service, with the latter winning the instrumentation title.

-

2016 - 2021

Pulchowk Campus, Lalitpur, Nepal

As a Research Assistant on a UGC Collaborative Research Grant under the supervision of Dr. Sanjeeb Prasad Panday, I conducted experiments to determine depth from a single image. I compared the advantages of monocular depth estimation against traditional methods such as LiDAR, Kinect, and stereo vision. Additionally, I explored the feasibility of generating 3D point clouds from drone images for surveillance in disaster-stricken areas. This research culminated in a published paper in a scientific journal.

Competencies

Technical Competencies

Here are the technical skills I have acquired throughout my computer science career. While I don't claim to be a master of all these skills, I am confident in my ability to hold a position that utilizes any of these competencies and to further develop my expertise as needed.

Languages

Python, C++, C, JavaScript, MySQL, HTML, CSS, MATLAB

Coding Methodologies

Test-Driven Development, Agile, Clean Code, Useful Comments, Ability to adhere to ISO and other standards

Project Management

SCRUM, GitHub, Jupyter Notebooks, Presentation Skills, Team Leadership/Management, Cross-Team Communication, Sense of Humor

Interests

Machine Learning

Data Science

Computer Vision

Data Visualization

Natural Language Processing

Generative AI

MLOps

Software Engineering

Software Development

Relevant Courses Taken

Algorithm (CS 514)

Machine Learning

Deep Learning

Natural Language Processing

Operating Systems

Artificial Intelligence

Project Management

Software Engineering

Database Management System

Big Ideas in AI

AI Ethics

Calculus I - III

Discrete Structures

Frameworks

NumPy, Scikit-Learn, TensorFlow, Keras, PyTorch, Matplotlib, OpenCV, Pandas, SciPy, Hugging Face, spaCy, NLTK, LangChain, Django, Flask, FastAPI, Streamlit

Portfolio

My Projects

See my GitHub for actual details on the following projects. Due to certain agreements, I cannot share the GitHub links for most of the projects that are currently in production.

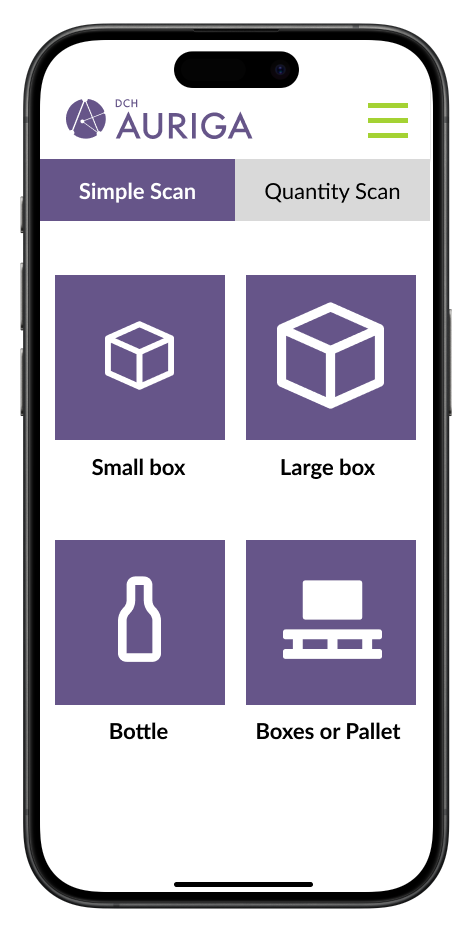

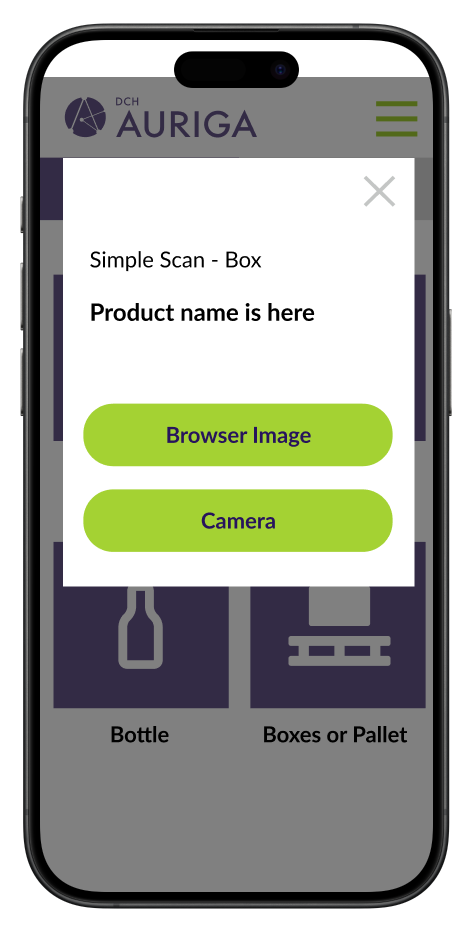

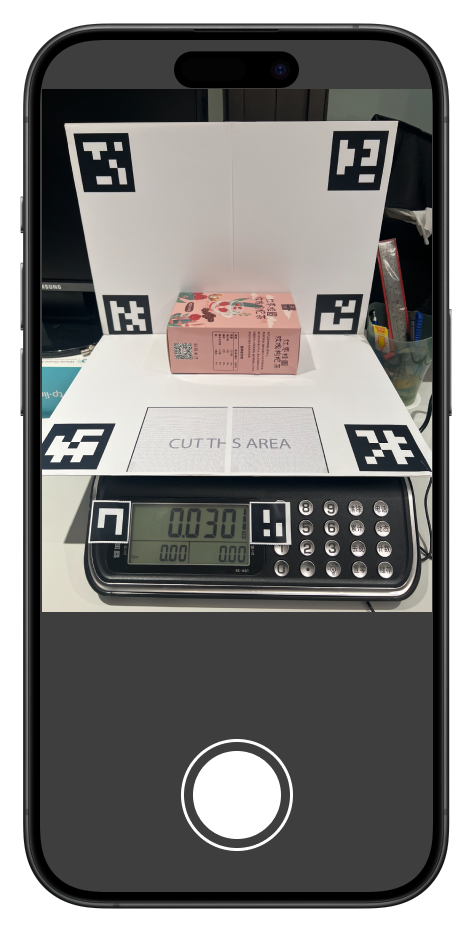

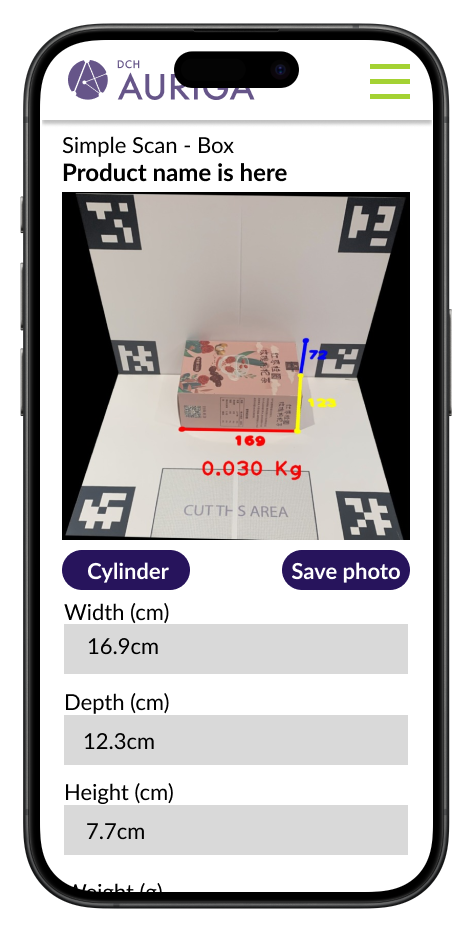

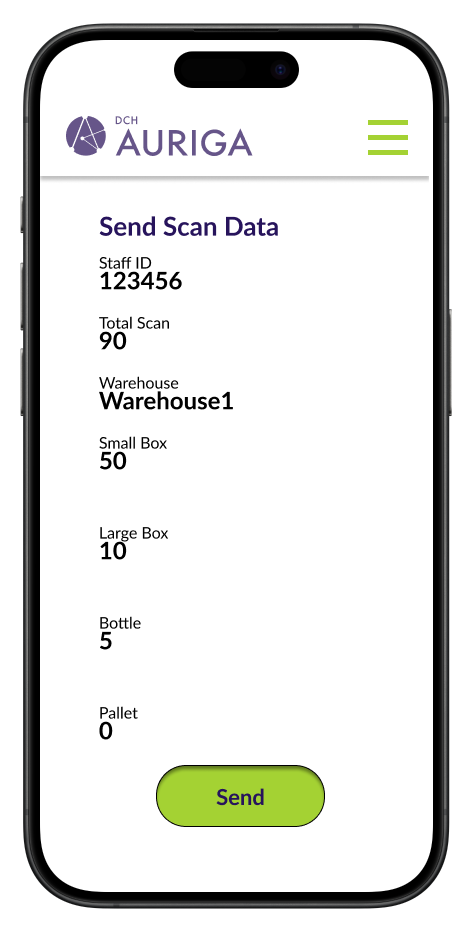

Automatic Dimension Measurement System

I designed and developed a fully functional pipeline for our client, DCH Auriga, to measure the dimensions and weight of box and cylinder objects from an image using various computer vision and machine learning algorithms. Additionally, I created both the front-end and back-end of a web application equipped with a camera-enabled feature to scan QR IDs and take pictures of the boxes. In our setup, we used ArUco markers as references for real-world measurements, placing the box on a weighing machine surrounded by these markers.

I used techniques such as background removal, keypoint detection, homography transformation, contour detection, and computer vision filters to measure the dimensions of the box. Similarly, to detect and classify the digits, I used YOLOv8 object detection. I also created a daily cron job on the server that emails each warehouse the scan details of the boxes.

This solution simplified warehouse operations by automating dimension measurements, reducing operating costs by 30% and time by 50%, eliminating the need for manual labor, and accurately estimating the optimal quantity of boxes for storage.
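The reference-marker idea described above can be sketched in a few lines. This is a minimal illustration, not the production pipeline: it assumes the image has already been rectified to a top-down view (for example with a homography), that the marker and box corners are available as ordered pixel coordinates, and that the printed side length of the marker is known. The function names and the numbers in the example are hypothetical.

```python
import math

def side_lengths(corners):
    """Edge lengths (in pixels) of a quadrilateral given as four ordered (x, y) corners."""
    return [math.dist(corners[i], corners[(i + 1) % 4]) for i in range(4)]

def mm_per_pixel(marker_corners, marker_side_mm):
    """Scale factor from the average pixel side length of a square reference marker."""
    average_side_px = sum(side_lengths(marker_corners)) / 4
    return marker_side_mm / average_side_px

def box_dimensions_mm(box_corners, scale):
    """Length and width of a rectified box face, averaging opposite edges."""
    edges = side_lengths(box_corners)
    a = (edges[0] + edges[2]) / 2 * scale
    b = (edges[1] + edges[3]) / 2 * scale
    return max(a, b), min(a, b)

# Hypothetical example: a 50 mm marker that spans 100 px in the rectified image.
marker = [(0, 0), (100, 0), (100, 100), (0, 100)]
box = [(200, 200), (800, 200), (800, 600), (200, 600)]
scale = mm_per_pixel(marker, marker_side_mm=50.0)
length_mm, width_mm = box_dimensions_mm(box, scale)
print(scale, length_mm, width_mm)
```

Averaging the four marker sides and the opposite box edges is one simple way to dampen small corner-detection errors; a real system would likely also combine several markers.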

SKILLS:

- Machine Learning, Computer Vision, Deep Learning, Data Annotation, MLOps

- Python, HTML, JavaScript, CSS

- TensorFlow, OpenCV, Django, NumPy, Pandas

- API Request, SQL, AWS Deployment

- GitHub, Project Management, Team Work

Video to Online Shopping

This project matches clothes seen in MoJ videos and movies to similar items in an online shop (Flipkart), and recommends product ads that share the category, color, and pattern of the items in the video. Most people want to buy the products they see in movies and on TikTok, but how do they find those products on an e-commerce site? Typically, they type every requirement into the search bar, for example “red shirt with logos in the middle”, and end up with a different product. Offering only text search lowers the purchase rate, since most users don’t find what they are actually looking for. Advances in deep learning and computer vision make automatic search easier. Video to Online Shopping detects the central character in the video and automatically searches the database for the matching products. There is no hassle of searching, typing, or opening an e-commerce site, because the product is available right next to the video.

SKILLS:

- Machine Learning, Computer Vision, Deep Learning, MLOps

- TensorFlow, PyTorch, OpenCV, Django, NumPy, Pandas

- Web Scraping, Data Augmentation, Data Filtering

- Django, API Request, SQL, AWS Deployment

- GitHub, Project Management, Team Work

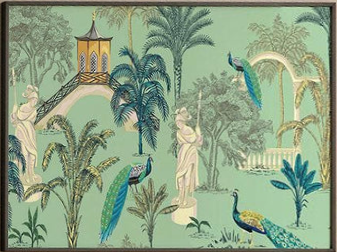

Artwork Segmentation

I developed a deep learning pipeline for our client to segment artwork, designs, and logos from backgrounds such as walls and surfaces. This pipeline works with any orientation of the artwork by detecting the perpendicular of the artwork and performing a homographic transformation to preserve the aspect ratio of the painting. Additionally, it can classify whether the artwork is a rectangular or irregular object. Our client used our algorithm to provide an auto-select feature in their app, enabling accurate extraction of artwork from different angles while maintaining the real dimensions of the art. The extracted artwork is then used to decorate both real-world and virtual rooms within the application.

SKILLS:

- Machine Learning, Computer Vision, Deep Learning, Landmark Detection

- TensorFlow, PyTorch, OpenCV, Flask, NumPy, Pandas

- Data Augmentation, Data Filtering, Data Annotation

- GitHub, Project Management

Tennis Game Analysis

I worked with the team at Fieldtown Software to create a machine-learning pipeline and an API for the ProTracker iOS app. This system automatically detects the players, the ball, the court, and the corners. It tracks the distance traveled by the players, detects the ball's bounce on the ground, and classifies it as "IN" or "OUT" according to tennis rules. Additionally, it identifies if the ball is served, a forehand, or a backhand shot, and records the total distance traveled by the players.

We developed a pipeline for tennis game analysis using deep learning techniques (CNN, LSTM, Neural Network) to calculate players’ scores, visualize ball bounce points, and predict three types of strokes: backhand, forehand, and serve. This improved the depth and accuracy of tennis match analysis by 15%, providing players, coaches, and enthusiasts with valuable insights into player strategies and performance outcomes.
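The "IN" / "OUT" decision described above can be illustrated with a small geometric check. This is only a sketch under stated assumptions, not the ProTracker implementation: it assumes the bounce point has already been mapped into court coordinates in meters (for example through a homography from the detected court corners), with the origin at the center of the court. The function name is hypothetical; the court dimensions are the standard ones (23.77 m long, 8.23 m wide for singles, 10.97 m for doubles). It ignores service boxes and the size of the ball.

```python
COURT_LENGTH_M = 23.77   # baseline to baseline
SINGLES_WIDTH_M = 8.23   # singles sideline to sideline
DOUBLES_WIDTH_M = 10.97  # doubles sideline to sideline

def classify_bounce(x_m, y_m, doubles=False):
    """Classify a bounce given in court coordinates (meters, origin at court center).

    A ball that lands on a line counts as "IN", so the comparisons are inclusive.
    """
    width = DOUBLES_WIDTH_M if doubles else SINGLES_WIDTH_M
    inside = abs(x_m) <= width / 2 and abs(y_m) <= COURT_LENGTH_M / 2
    return "IN" if inside else "OUT"

# Hypothetical bounce points.
print(classify_bounce(4.0, 0.0))                 # inside the singles sideline
print(classify_bounce(4.5, 0.0))                 # in the doubles alley, singles play
print(classify_bounce(4.5, 0.0, doubles=True))   # same point, doubles play
print(classify_bounce(0.0, 12.0))                # beyond the baseline
```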

SKILLS:

- Machine Learning, Computer Vision, Deep Learning

- TensorFlow, PyTorch, OpenCV, Flask, NumPy, Pandas

- Data Augmentation, Data Filtering, Data Annotation

- GitHub, Project Management

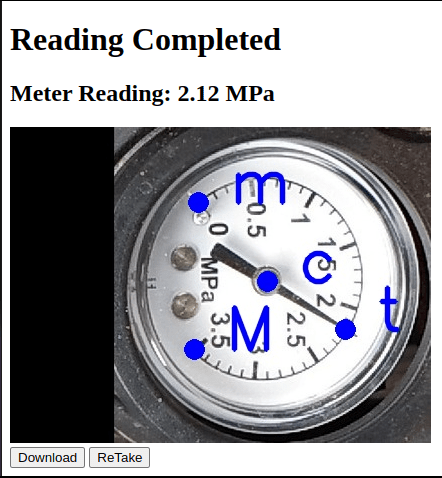

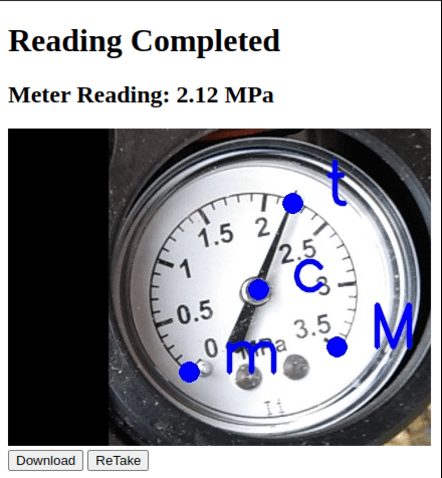

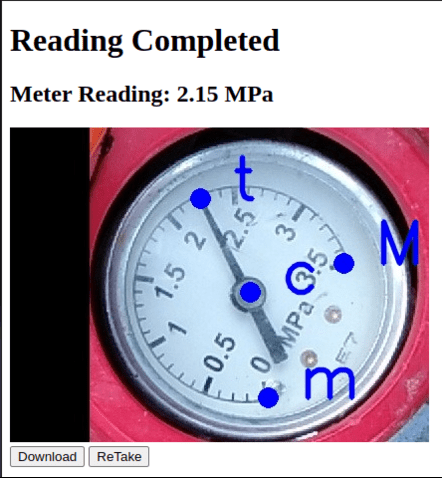

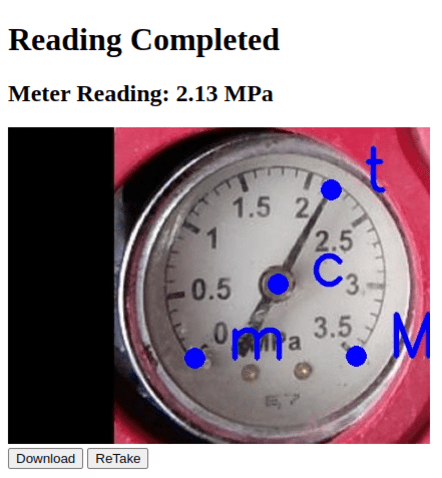

Analog Gauge Meter Reading

I created a machine learning algorithm that uses a camera to read analog gauge meters and automatically record the readings on a server. This is particularly useful in industries that still use analog systems, where previously, a person had to manually read and enter the meter values for data analysis. My system automates this process by accurately calculating the pressure readings. The technologies I used include object detection, keypoint detection, computer vision filters, homographic transformation, and cosine geometry.

I created a simple Flask app to test reading from the real gauge meter. However, in production, the machine learning model is deployed as an API that takes an image of the gauge meter as input and returns the MPa value.
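The final step, turning detected keypoints into a reading, can be sketched as follows. This is a simplified illustration rather than the deployed model: it assumes the gauge face has already been rectified, that keypoint detection provides the gauge center, the needle tip, and the minimum and maximum scale marks in pixel coordinates, that the scale is linear, and that the needle sweeps clockwise from minimum to maximum. It uses `atan2` instead of the law of cosines because `atan2` keeps the direction of rotation. All names and values are hypothetical.

```python
import math

def clockwise_sweep(center, start, end):
    """Clockwise angle in radians from center->start to center->end.

    Uses image coordinates (y grows downward), where increasing atan2
    angles correspond to clockwise rotation on screen.
    """
    a0 = math.atan2(start[1] - center[1], start[0] - center[0])
    a1 = math.atan2(end[1] - center[1], end[0] - center[0])
    return (a1 - a0) % (2 * math.pi)

def read_gauge(center, needle_tip, min_mark, max_mark, min_value, max_value):
    """Linearly interpolate the reading from the needle's position on the scale arc."""
    full_arc = clockwise_sweep(center, min_mark, max_mark)
    needle_arc = clockwise_sweep(center, min_mark, needle_tip)
    # No handling for a needle resting in the dead zone below the minimum mark.
    fraction = needle_arc / full_arc
    return min_value + fraction * (max_value - min_value)

# Hypothetical gauge: 0 to 1.6 MPa over a 270-degree arc, needle pointing straight up.
reading = read_gauge(
    center=(0, 0),
    needle_tip=(0, -1),   # up on screen, because y grows downward
    min_mark=(-1, 1),     # lower left
    max_mark=(1, 1),      # lower right
    min_value=0.0,
    max_value=1.6,
)
print(round(reading, 3))
```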

SKILLS:

- Machine Learning, Computer Vision, Deep Learning, Landmark Detection

- TensorFlow, PyTorch, OpenCV, Flask, NumPy, Pandas

- Data Augmentation, Data Filtering, Data Annotation

- GitHub, Project Management

Publications

Machine Learning Publications

Here is a list of my publications in machine learning as the first author.

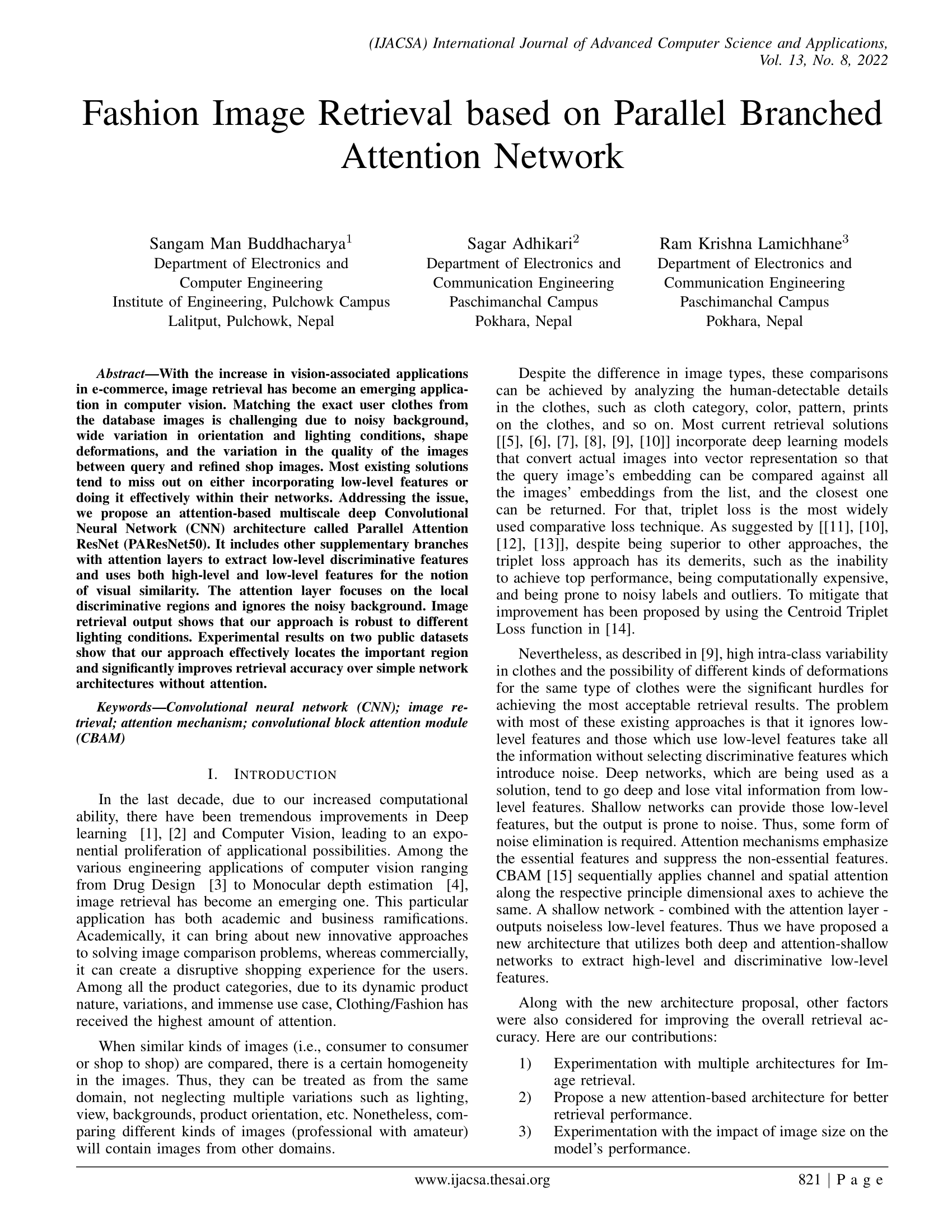

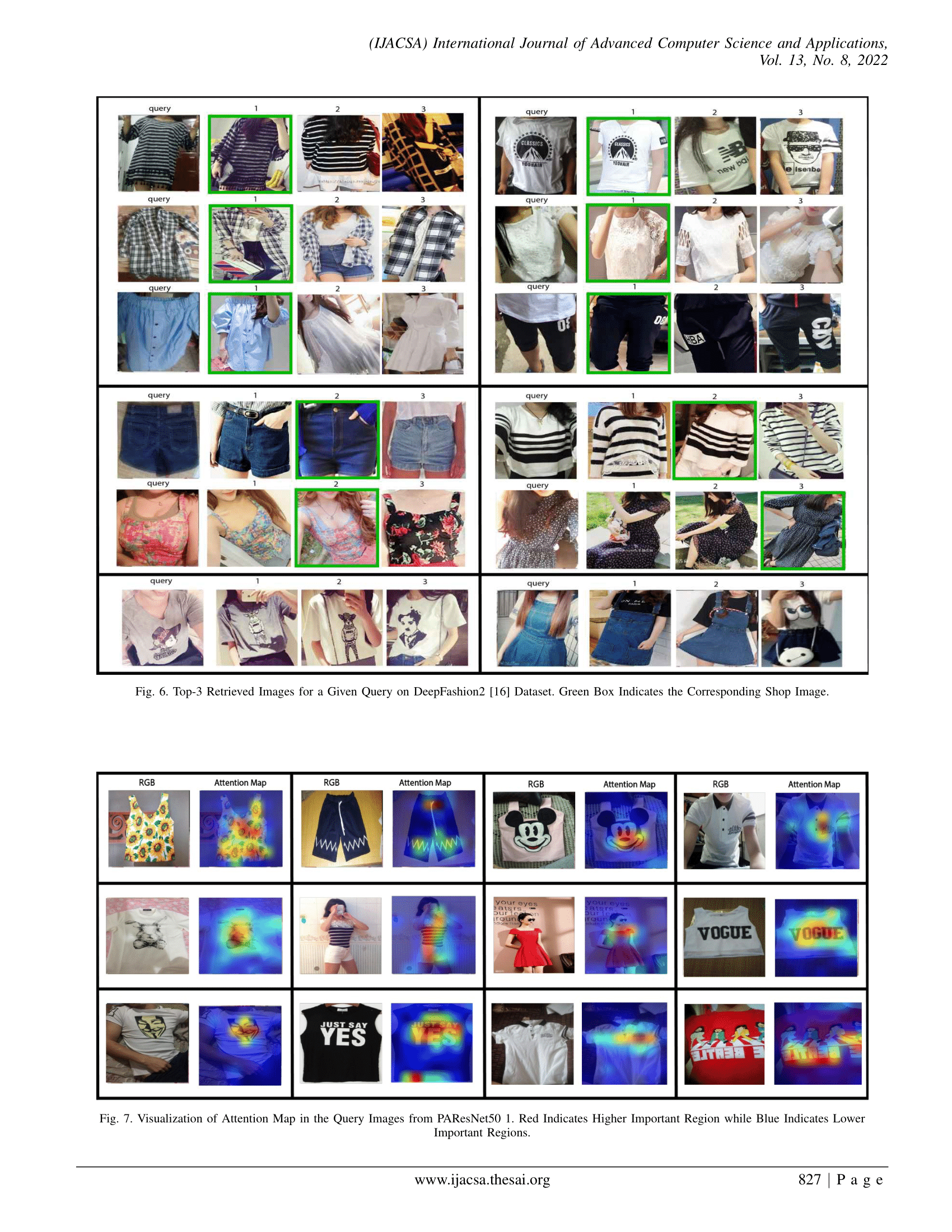

Fashion Image Retrieval based on Parallel Branched Attention Network

With the increase in vision-associated applications in e-commerce, image retrieval has become an emerging application in computer vision. Matching the exact user clothes from the database images is challenging due to noisy background, wide variation in orientation and lighting conditions, shape deformations, and the variation in the quality of the images between query and refined shop images. Most existing solutions tend to miss out on either incorporating low-level features or doing it effectively within their networks. Addressing the issue, we propose an attention-based multiscale deep Convolutional Neural Network (CNN) architecture called Parallel Attention ResNet (PAResNet50). It includes other supplementary branches with attention layers to extract low-level discriminative features and uses both high-level and low-level features for the notion of visual similarity. The attention layer focuses on the local discriminative regions and ignores the noisy background. Image retrieval output shows that our approach is robust to different lighting conditions. Experimental results on two public datasets show that our approach effectively locates the important region and significantly improves retrieval accuracy over simple network architectures without attention.

KEYWORDS:

- Convolutional neural network (CNN), image retrieval, attention mechanism, convolutional block attention module (CBAM)

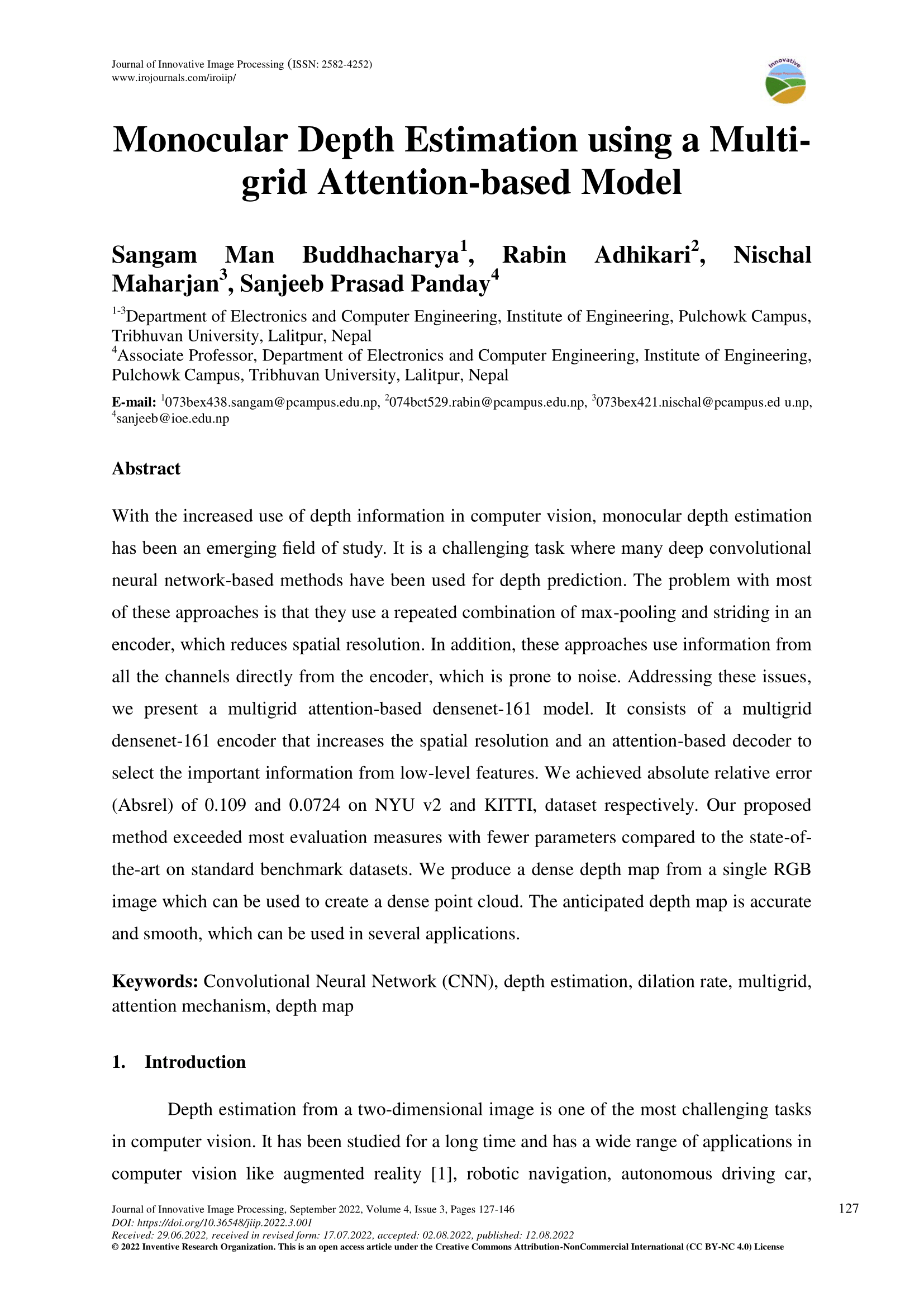

Monocular Depth Estimation using a Multi-grid Attention-based Model

With the increased use of depth information in computer vision, monocular depth estimation has been an emerging field of study. It is a challenging task where many deep convolutional neural network-based methods have been used for depth prediction. The problem with most of these approaches is that they use a repeated combination of max-pooling and striding in an encoder, which reduces spatial resolution. In addition, these approaches use information from all the channels directly from the encoder, which is prone to noise. Addressing these issues, we present a multigrid attention-based DenseNet-161 model. It consists of a multigrid DenseNet-161 encoder that increases the spatial resolution and an attention-based decoder to select the important information from low-level features. We achieved an absolute relative error (AbsRel) of 0.109 and 0.0724 on the NYU v2 and KITTI datasets, respectively. Our proposed method exceeded the state of the art on most evaluation measures with fewer parameters on standard benchmark datasets. We produce a dense depth map from a single RGB image, which can be used to create a dense point cloud. The predicted depth map is accurate and smooth, and can be used in several applications.

KEYWORDS:

- Convolutional Neural Network (CNN), depth estimation, dilation rate, multigrid, attention mechanism, depth map
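For readers unfamiliar with the metric, absolute relative error is the mean of |predicted depth - true depth| / true depth over the evaluated pixels. The sketch below is a plain-Python illustration, not the paper's evaluation code; skipping pixels whose ground-truth depth is not positive is an assumption that reflects common practice for sparse depth maps, and the sample values are made up.

```python
def abs_rel(predicted, ground_truth):
    """Mean absolute relative error over pixels with a valid (positive) ground-truth depth."""
    valid = [(p, g) for p, g in zip(predicted, ground_truth) if g > 0]
    if not valid:
        raise ValueError("no valid ground-truth depths to evaluate")
    return sum(abs(p - g) / g for p, g in valid) / len(valid)

# Made-up depths in meters; the third pixel has no ground truth and is skipped.
error = abs_rel([1.1, 2.0, 3.0], [1.0, 2.0, 0.0])
print(round(error, 4))
```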

CNN-Based Continuous Authentication of Smartphones Using Mobile Sensors

With the advancement of technology, the smartphone has become a reliable place to store private details, personal photos, credentials, and confidential information. However, smartphones are easily stolen and have become a suitable target for attackers. Usually, smartphones require only an initial explicit authentication; once the initial login is passed, all the information can be accessed easily. Hence, this paper proposes an efficient implicit, continuous authentication of the smartphone based on the user’s behavioral characteristics. We propose an architecture to differentiate legitimate smartphone owners from intruders. Our model relies on the smartphone’s built-in sensors, such as the accelerometer, gyroscope, and GPS. The sensors respond according to the user’s behavior, which is recorded by the smartphone. We use a rest filter model to separate motion data from rest data, since the rest data does not contain much information about the user’s behavior. We use XGBoost and a Convolutional Neural Network as our rest filter and legitimate-intruder classifier, respectively. Our system can distinguish legitimate users from intruders in a few seconds. Our proposed CNN model achieved an average accuracy of 95.79% on our custom dataset, which improved further after integrating GPS data.

KEYWORDS:

- Implicit continuous authentication, Convolutional Neural Network, XGBoost classifier, Accelerometer, Gyroscope